(Not So) Safe{Wallet}: GitHub Actions Risks Impacting Safe's Frontend

Introduction

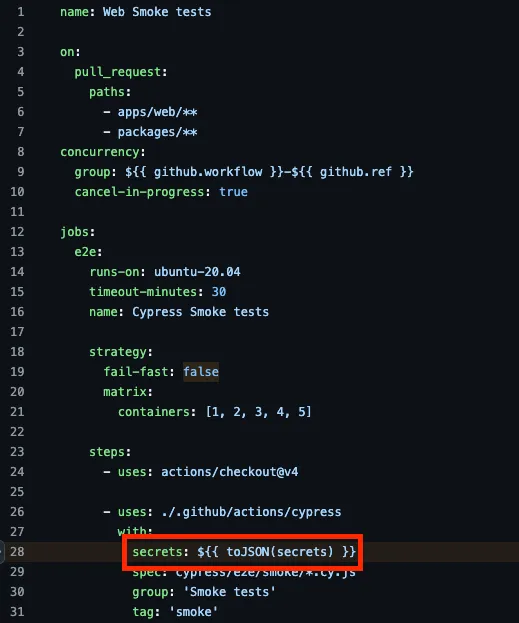

On February 21st, hackers associated with the North Korea-based Lazarus Group stole almost 1.4 billion dollars in Ethereum from Bybit, the third largest cryptocurrency exchange in the world. Lazarus pulled off this hack through a sophisticated operation that tricked legitimate signers into approving a malicious smart contract interaction. Bybit’s signers saw a legitimate transaction, but they ended up signing a malicious one. The night of the attack, Safe quickly claimed that they were not hacked.

Ultimately, Safe’s conclusion was false, and the root cause of the hack was Lazarus briefly modifying Safe’s frontend JavaScript code with a backdoor designed to only modify transactions associated with Bybit’s cold wallet. Web3 companies tend to spend a lot of time, money, and resources on smart-contract and “on-chain” security. They advertise massive bug bounties and sponsor audit competitions to attract the best security researchers to find bugs in their ecosystem.

Web3’s Achilles Heel

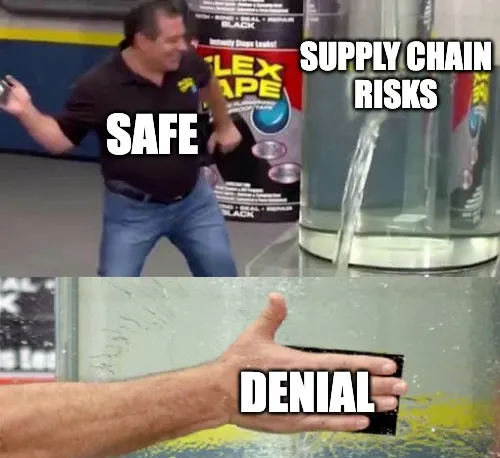

Web3 companies have an interesting relationship with supply chain security. Some take it seriously, while others focus only on the blockchain and care solely about smart contracts. As a result, supply chain risks are often ignored and go unreported. But guess what? Lazarus just cares about your funds, and they’ll hack Web2 and Web3 alike to get them. And they don’t give a damn about what a company’s valid security issue scope is.

Anyone in the supply chain security space knew this was coming. The Ledger Connect incident, the Solana Web3 JS attack, the DogWifTools hack; the list goes on. These were relatively small attacks compared to the larger smart contract or key theft attacks. They were detected quickly and impacted funds in the high six to low seven figures.

The writing was on the wall: if Lazarus wasn’t already focusing on supply chain attacks, they were now. As soon as I saw Bybit’s statement that the URL was normal and that they did not find malware, I had a suspicion of how it went down.

It turns out that’s exactly how it happened. The preliminary report by Verichains confirmed that Lazarus used a compromised Safe credential to replace a set of JavaScript files with backdoored versions.

Conclusion from Verichains.io Forensic Analysis

The largest hack in Web3 history was a supply chain attack.

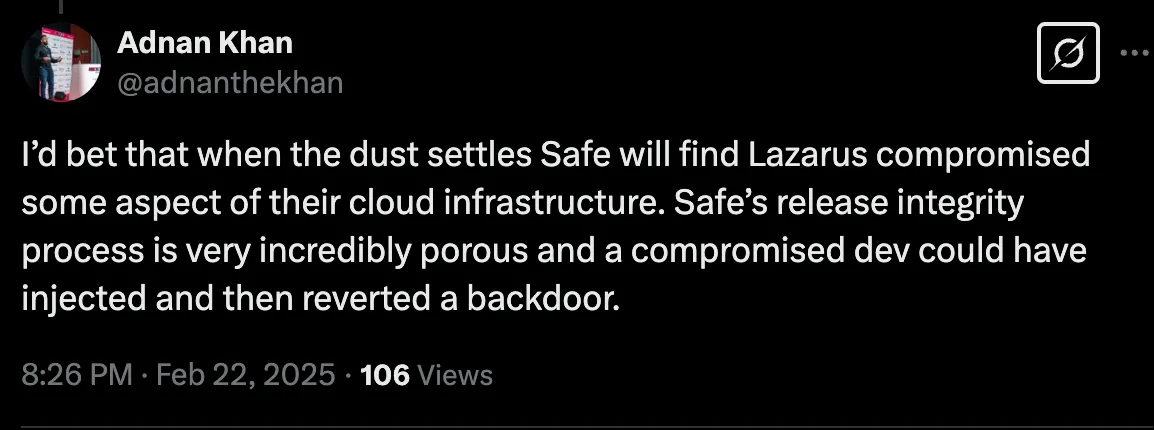

Safe’s initial response was pure denial, which is NOT what you do when you aren’t sure whether someone breached your systems. On the evening of the hack, Safe tweeted the following:

(https://x.com/safe/status/1893105625439093094)

If you do not detect a malicious modification, then “No unauthorized access to infrastructure was detected in the logs” is technically true, but not for the reasons we want. Many were surprised that Safe, a company with such a critical product for securing funds, could not protect its production infrastructure. This wasn’t surprising to me at all; it just took one look at their GitHub to see how much they value release integrity.

Shortly after the hack, I started looking at Safe’s GitHub CI/CD configuration and came to the conclusion:

Any Safe developer could backdoor the public CLI, frontend, and SDK releases due to several access control issues and CI/CD bad practices.

While there is currently no evidence the hack involved GitHub, it could happen again through a GitHub Actions attack if Lazarus obtains GitHub credentials belonging to a Safe developer.

How would you hack Safe?

DISCLAIMER: This blog post does not disclose directly exploitable vulnerabilities. The post is simply an analysis of the current state of Safe’s CI/CD process. In order to exploit any of this, an attacker would first need access to a Safe developer’s GitHub credentials. Based on discussions with other researchers who had reports poorly handled by Safe and Safe’s handling of the Bybit hack, it is in the best interest of the community to publicly disclose this because it allows for more eyes on the repository.

On Feb 26th, Safe made multiple changes to their release process, including adding release attestation signing (https://github.com/safe-global/safe-wallet-monorepo/attestations/5209853). While this is a step in the right direction, there are still gaps.

Let’s break them down.

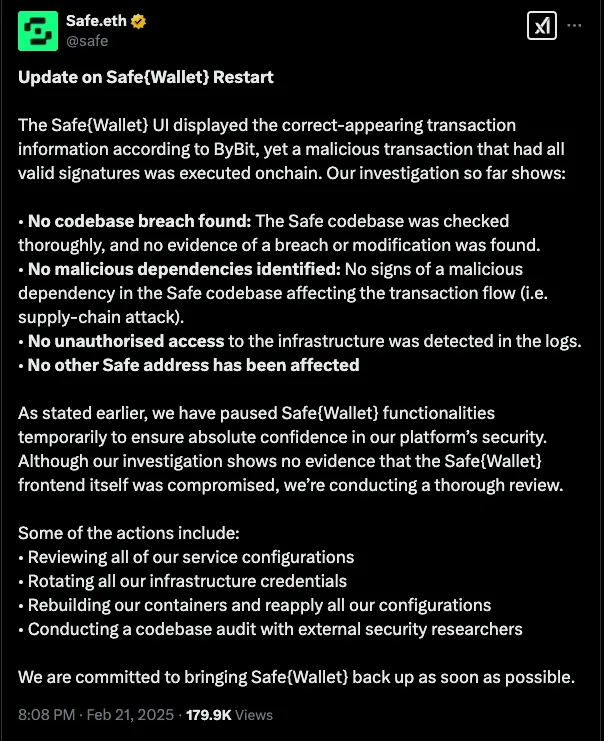

Target: Frontend

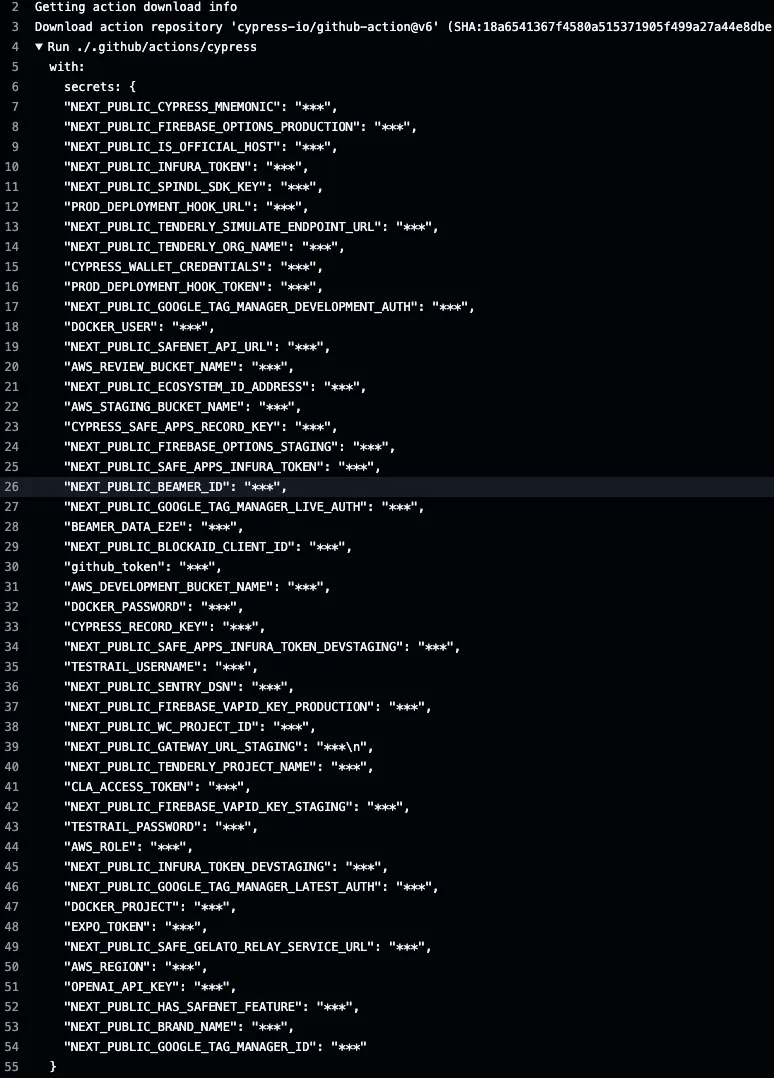

I took a look at the safe-wallet-monorepo, which holds the code for the Safe app at https://app.safe.global/. Immediately, I noticed that the repository uses an anti-pattern of passing Actions secrets through the toJSON() function in several workflows. The problem is that this exposes ALL secrets accessible to the repository in that workflow.

We can confirm this by looking at the run logs. GitHub does not show the secrets, but now we know the names of all secrets the repository can access.

If someone compromises a repo scoped access token belonging to a developer with write access to the repo, they would only need to make a pull request with a modified action file to capture all of these secrets.
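One way to narrow this exposure, sketched under assumptions, is to pass only the values a step needs as individually named inputs instead of serializing the whole `secrets` context. The input names and secret names below are hypothetical placeholders, not Safe's actual configuration, and the local build action would need matching `inputs` declared.

```yaml
# Hypothetical sketch: pass named secrets rather than toJSON(secrets).
steps:
  - uses: actions/checkout@v4
  - uses: ./.github/actions/build
    with:
      # Only these two values are handed to the action.
      # Both input names and both secret names are placeholders.
      example-api-token: ${{ secrets.EXAMPLE_API_TOKEN }}
      example-dsn: ${{ secrets.EXAMPLE_DSN }}
      prod: ${{ true }}
```

With explicit references, a secret that the workflow never names is not sent to the runner, so a modified step in that workflow has less to capture.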

Problem: Lack of Segmentation Between Dev and Release Flow

As of February 26th, the repository still uses OIDC federation to upload both development and production releases to S3. The way GitHub OIDC federation works is that the workflow receives a signed claim from GitHub tying it to the specific workflow. The workflow then exchanges the signed claim for AWS session credentials. You can read more about it here: https://aws.amazon.com/blogs/security/use-iam-roles-to-connect-github-actions-to-actions-in-aws/

Staging Workflow

    - name: Configure AWS credentials
      uses: aws-actions/configure-aws-credentials@v4
      with:
        role-to-assume: ${{ secrets.AWS_ROLE }}
        aws-region: ${{ secrets.AWS_REGION }}

    # Staging
    - name: Deploy to the staging S3
      if: startsWith(github.ref, 'refs/heads/main')
      env:
        BUCKET: s3://${{ secrets.AWS_STAGING_BUCKET_NAME }}/current
      working-directory: apps/web
      run: bash ./scripts/github/s3_upload.sh

    # Dev
    - name: Deploy to the dev S3
      if: startsWith(github.ref, 'refs/heads/dev')
      env:
        BUCKET: s3://${{ secrets.AWS_DEVELOPMENT_BUCKET_NAME }}
      working-directory: apps/web
      run: bash ./scripts/github/s3_upload.sh

Production Workflow

    - name: Configure AWS credentials
      uses: aws-actions/configure-aws-credentials@v4
      with:
        role-to-assume: ${{ secrets.AWS_ROLE }}
        aws-region: ${{ secrets.AWS_REGION }}

    # Script to upload release files
    - name: Upload release build files for production
      env:
        BUCKET: s3://${{ secrets.AWS_STAGING_BUCKET_NAME }}/releases/${{ github.event.release.tag_name }}
        CHECKSUM_FILE: ${{ env.ARCHIVE_NAME }}-sha256-checksum.txt
      run: bash ./scripts/github/s3_upload.sh
      working-directory: apps/web

    # Script to prepare production deployments
    - name: Prepare deployment
      run: bash ./scripts/github/prepare_production_deployment.sh
      working-directory: apps/web
      env:
        PROD_DEPLOYMENT_HOOK_TOKEN: ${{ secrets.PROD_DEPLOYMENT_HOOK_TOKEN }}
        PROD_DEPLOYMENT_HOOK_URL: ${{ secrets.PROD_DEPLOYMENT_HOOK_URL }}
        VERSION_TAG: ${{ github.event.release.tag_name }}

The workflows use the same role for development, staging, and production releases, which means the role assumed from the development workflow can technically push to the release staging bucket, and due to the toJSON(secrets) issue, the workflow also has access to the production release web-hook secrets. As a result, anyone who can run code in the dev workflow can indirectly push to the prod staging environment.

Within the production workflow itself we see it copy multiple files to the staging S3 bucket, including a checksum file. GitHub redacts the bucket name as expected because it is defined as an actions secret.

You can see an example file here:

https://app.safe.global/safe-wallet-monorepo-v1.51.3-sha256-checksum.txt

There might be some additional checks between the prod staging environment and prod, so this might not lead to a direct compromise of the prod frontend, but it would still present serious risks.

How could someone hack it?

In order to hack this, an attacker would only need to use the compromised developer token to create a new feature branch in the repository (or re-use an existing one). Then, they could push a code modification to that branch masquerading as normal development, but include additional code to grab secrets from the workflow and enumerate the cloud access.

Once they finish, they can force-push to remove the changes, delete the workflow run logs, and most likely no one would notice. If Safe was not monitoring its production release logs, it is probably not proactively checking its GitHub logs either; few organizations do.

Problem: Use of Caching in Release Flow

Next, I took a look at how the release workflow actually builds the release artifact. When a maintainer creates a release, it runs a workflow that builds the code and uploads it to a staging S3 bucket. The problem with this workflow is the extensive consumption of the GitHub Actions Cache. GitHub Actions caching can be used to run arbitrary code in consuming workflows if you can poison it.

I’ve blogged about it in Monsters in your Build Cache, and recently released some PoC Malware to demonstrate it. There is too much to cover in this post, so I would recommend looking at the resources for more information. The key takeaway is that writing to the cache only requires code execution in the main branch or execution in a branch/tag that matches the expected release tag.

The attack vector would allow for a near-silent backdoor of the frontend source code. The Ultralytics supply chain attack from December 2024 used this vector to backdoor the release on PyPI.

    name: Web Release

    on:
      release:
        types: [published]

    jobs:
      release:
        permissions:
          id-token: write
        runs-on: ubuntu-latest
        name: Deploy release
        env:
          ARCHIVE_NAME: ${{ github.event.repository.name }}-${{ github.event.release.tag_name }}
        steps:
          - uses: actions/checkout@v4
          - uses: ./.github/actions/yarn
          - uses: ./.github/actions/build
            with:
              secrets: ${{ toJSON(secrets) }}
              prod: ${{ true }}
          - name: Create archive
            run: tar -czf "$ARCHIVE_NAME".tar.gz out
            working-directory: apps/web
          - name: Create checksum
            run: sha256sum "$ARCHIVE_NAME".tar.gz > ${{ env.ARCHIVE_NAME }}-sha256-checksum.txt
            working-directory: apps/web
          - name: Configure AWS credentials
            uses: aws-actions/configure-aws-credentials@v4
            with:
              role-to-assume: ${{ secrets.AWS_ROLE }}
              aws-region: ${{ secrets.AWS_REGION }}
          # Script to upload release files
          - name: Upload release build files for production
            env:
              BUCKET: s3://${{ secrets.AWS_STAGING_BUCKET_NAME }}/releases/${{ github.event.release.tag_name }}
              CHECKSUM_FILE: ${{ env.ARCHIVE_NAME }}-sha256-checksum.txt
            run: bash ./scripts/github/s3_upload.sh
            working-directory: apps/web
          # Script to prepare production deployments
          - name: Prepare deployment
            run: bash ./scripts/github/prepare_production_deployment.sh
            working-directory: apps/web
            env:
              PROD_DEPLOYMENT_HOOK_TOKEN: ${{ secrets.PROD_DEPLOYMENT_HOOK_TOKEN }}
              PROD_DEPLOYMENT_HOOK_URL: ${{ secrets.PROD_DEPLOYMENT_HOOK_URL }}
              VERSION_TAG: ${{ github.event.release.tag_name }}

In this case, the workflow consumes the cache within the .github/actions/yarn and .github/actions/build reusable local actions.

GitHub Actions caching works by extracting a tar file with the -P flag set. This means a poisoned cache entry can overwrite any file on the GitHub runner’s file system that the runner user has write permission over.

How to hack it?

Starting from a compromised developer token, how could an attacker compromise the releases through cache poisoning?

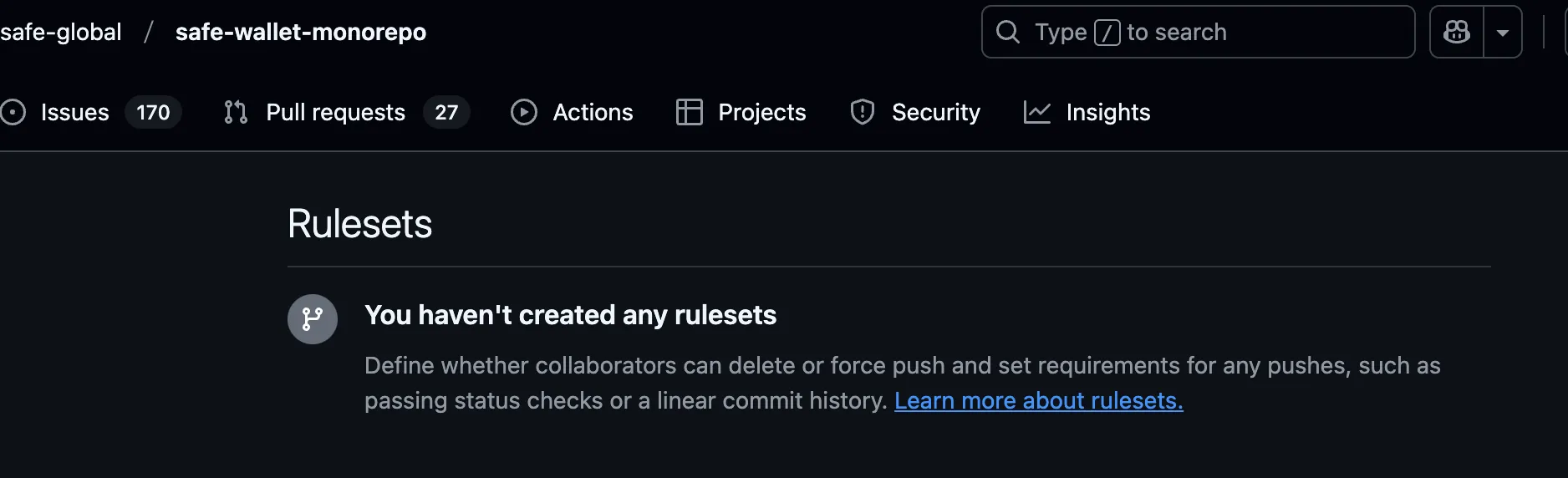

In order to poison the cache for a release, an attacker needs to set poisoned cache entries for the protected dev branch or the release tag. I didn’t find a way to set a main branch entry on the repo without introducing code into the main branch, but I noticed that the repository does not use any tag protection rules.

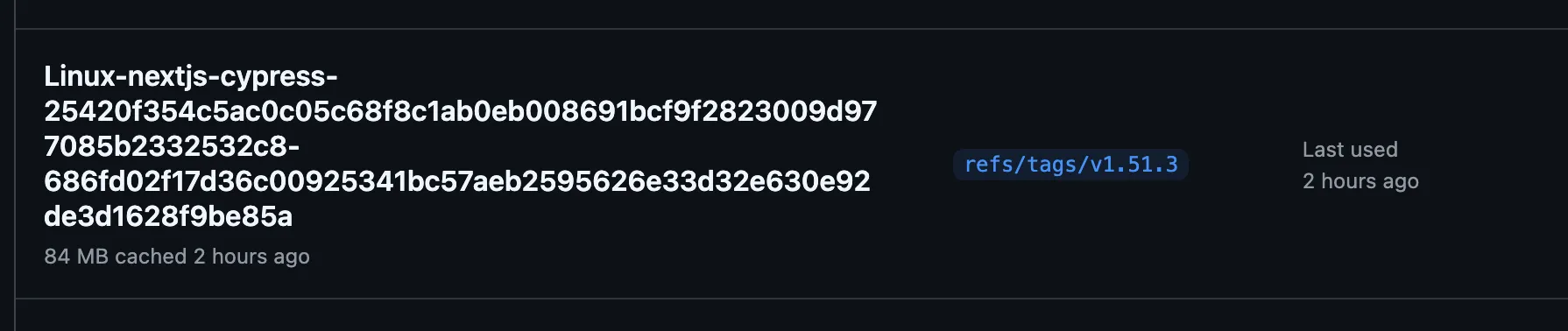

We can see that the production releases use cache values scoped to the tags.

If an attacker simply creates a cache and poisons it with a key matching the restore key, then they can run arbitrary code in the production release workflow when it consumes the poisoned cache. They can also poison the frontend code within the build by replacing source code files. In this scenario, the original commit will not have malicious code, only the release will.

    - name: Restore Yarn Cache
      if: ${{ inputs.mode == 'restore-yarn' }}
      id: restore
      uses: actions/cache/restore@v4
      with:
        path: |
          **/node_modules
          /home/runner/.cache/Cypress
          ${{ github.workspace }}/.yarn/install-state.gz
          ${{ github.workspace }}/apps/web/src/types
        key: ${{ runner.os }}-web-core-modules-${{ hashFiles('**/package.json','**/yarn.lock') }}
        restore-keys: |
          ${{ runner.os }}-web-core-modules-

If someone had a token belonging to a developer with write access to the repository and the repo scope, here is what they could do:

- Modify code used in a workflow that runs on workflow_dispatch. The code could deploy a slightly modified version of Cacheract to set non-default branch cache entries. Configure a poisoned cache entry under the key linux-web-core-modules-.

- Commit the change and tag it v1.51.4 (or another anticipated release).

- Cacheract will poison the cache.

- The next time a release workflow runs, the attacker will have arbitrary execution in the workflow.

They can use the poisoned cache to alter the build workflow behavior entirely. They could build and attest to an artifact that does not contain a backdoor, but upload files containing a backdoor to the release environment. Once you have code execution in a production release workflow, only your imagination is the limit for how to achieve end-impact.

What can Safe (and most other Web3 companies) do better?

For critical systems (and after the 1.4 billion hack we can all agree - Safe is critical within the Web3 industry), it is important to isolate the production release from development activities. It should never be possible for a single compromised developer credential to impact production without multiple opportunities for detection. These CI/CD attack techniques also apply to Safe’s SDK and CLI releases.

The reality is, most Web3 companies do not factor single developer compromises into their threat model. This is especially true for developer access to CI/CD.

Take https://github.com/anza-xyz/solana-web3.js for example, the name might sound familiar. Their prod and canary release workflows attempt to restrict dispatch events to the main branch, but the branches filter actually is not supported for workflow_dispatch.

    name: Publish Canary Releases

    on:
      workflow_dispatch:
        branches:
          - main

    name: Publish Packages

    on:
      workflow_dispatch:
        branches:
          - main
      push:
        branches:
          - main

This means that any developer token with just repo scope (or GITHUB_TOKEN with actions: write and contents: write) can create a feature branch with modified code, issue a workflow dispatch event, and steal the NPM Token. Now you have a repeat of the supply chain attack.
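Because the `branches` filter is ignored for `workflow_dispatch`, any restriction has to be enforced somewhere else. A minimal sketch, assuming a job-level condition is acceptable; the `publish` job name and the echo step are placeholders, not the project's actual workflow:

```yaml
name: Publish Packages

on:
  workflow_dispatch:
  push:
    branches:
      - main

jobs:
  publish:
    # Skip the job unless the run targets the main branch.
    if: github.ref == 'refs/heads/main'
    runs-on: ubuntu-latest
    steps:
      - run: echo "publish steps go here"
```

This guard only stops accidental dispatches. A dispatched run uses the workflow file from the selected branch, so anyone who can push a feature branch can also delete the condition. Keeping the NPM token in a deployment environment restricted to `main` is the stronger control.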

They also don’t restrict GITHUB_TOKEN permissions, which means any compromised upstream dependency could contain a payload to steal the Solana Web3 JS NPM token.
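Restricting the token is a small change. The sketch below assumes a workflow with separate `build` and `publish` jobs (both names and the placeholder steps are illustrative); it sets a read-only default and widens permissions only where a job needs them:

```yaml
name: Publish (sketch)

on:
  push:
    branches:
      - main

# Workflow-level default: GITHUB_TOKEN can only read repository contents.
permissions:
  contents: read

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - run: echo "install dependencies and build here"

  publish:
    needs: build
    runs-on: ubuntu-latest
    # Widen permissions only on the job that actually needs them.
    permissions:
      contents: write
    steps:
      - run: echo "publish steps go here"
```

With a read-only default, code from a compromised dependency running in the `build` job cannot use the token to push commits or dispatch other workflows.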

Supply chain security is hard, and once you add upstream and direct compromises into the mix, almost every Web3 company is susceptible to attacks causing loss of funds. I could go on and list CI/CD holes in most Web3 companies in the event of developer or developer token compromise.

There are some good examples of Web3 companies that care about CI/CD and supply chain security and invest resources to improve it. MetaMask’s GitHub Actions configurations are a good example.

Use GitHub Deployment Environments and a Dedicated Release Role

GitHub supports deployment environments, which allow maintainers to add additional controls such as which branches can deploy to it and even add bake times. Maintainers can also save secrets to deployment environments, so any user with write access to the repository cannot use a tool like Gato-X to quickly dump all of the secrets.
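As a rough illustration, a job opts into an environment with the `environment` key. The environment name `production` and the secret name `AWS_RELEASE_ROLE` are assumptions for this sketch; the protection rules themselves (required reviewers, allowed branches or tags, wait timers) are configured in the repository settings rather than in the workflow file.

```yaml
jobs:
  deploy:
    runs-on: ubuntu-latest
    # Hypothetical environment name; the job waits here until the
    # environment's protection rules are satisfied.
    environment: production
    permissions:
      id-token: write
      contents: read
    steps:
      - uses: actions/checkout@v4
      - name: Configure AWS credentials
        uses: aws-actions/configure-aws-credentials@v4
        with:
          # Stored as environment secrets, so jobs that do not declare
          # this environment cannot read them.
          role-to-assume: ${{ secrets.AWS_RELEASE_ROLE }}
          aws-region: ${{ secrets.AWS_REGION }}
```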

Similarly, AWS supports specifying a specific deployment environment in a repository for OIDC trust permissions. If other workflows in a repository try to assume a role that requires a deployment environment, then it will not work.
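A hedged sketch of such a trust policy, written as a CloudFormation template. The role name and the `production` environment name are hypothetical, and the template assumes the GitHub OIDC identity provider already exists in the account. The `sub` condition uses the subject claim GitHub issues for jobs that reference an environment.

```yaml
Resources:
  ReleaseRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: example-frontend-release   # hypothetical name
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Federated: !Sub "arn:aws:iam::${AWS::AccountId}:oidc-provider/token.actions.githubusercontent.com"
            Action: sts:AssumeRoleWithWebIdentity
            Condition:
              StringEquals:
                "token.actions.githubusercontent.com:aud": "sts.amazonaws.com"
                # Only jobs that declare the "production" environment
                # receive a token with this subject.
                "token.actions.githubusercontent.com:sub": "repo:safe-global/safe-wallet-monorepo:environment:production"
```

A workflow on a feature branch that does not pass through the environment receives a different `sub` value, so the `AssumeRoleWithWebIdentity` call is denied.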

Using a dedicated role that only has access to the production staging bucket, and then using a deployment environment with 2PR required will prevent a single developer compromise from impacting the production release process.

Deploy from Attested Build

Safe should split its release workflow into two. The first one should build and sign an artifact with attestation. The second should download the signed artifact, verify that it matches the provenance, and then deploy artifacts from it without building any new code.
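The split could look roughly like the following, shown here as two jobs in one workflow for brevity. This is a sketch under assumptions, not Safe's workflow: the build script path, the archive name `release.tar.gz`, and the `production` environment are placeholders. It relies on `actions/attest-build-provenance` to sign and on `gh attestation verify` to check provenance before anything is deployed.

```yaml
name: Release (sketch)

on:
  release:
    types: [published]

jobs:
  build:
    runs-on: ubuntu-latest
    permissions:
      contents: read
      id-token: write       # needed to sign the attestation
      attestations: write   # needed to store the attestation
    steps:
      - uses: actions/checkout@v4
      # Hypothetical build script that produces release.tar.gz.
      - run: bash ./scripts/build_release.sh
      - uses: actions/attest-build-provenance@v2
        with:
          subject-path: release.tar.gz
      - uses: actions/upload-artifact@v4
        with:
          name: release
          path: release.tar.gz

  deploy:
    needs: build
    runs-on: ubuntu-latest
    environment: production   # hypothetical protected environment
    permissions:
      contents: read
      id-token: write
      attestations: read
    steps:
      - uses: actions/download-artifact@v4
        with:
          name: release
      - name: Verify provenance before deploying
        env:
          GH_TOKEN: ${{ github.token }}
        run: gh attestation verify release.tar.gz --repo "$GITHUB_REPOSITORY"
      # No checkout, dependency install, or build happens in this job.
      - run: echo "upload the verified archive here"
```

The deploy job never executes repository or dependency code, so the release credentials are only exposed to steps that copy an already verified archive.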

This reduces risks associated with performing the build (which could include installing dependencies) in the same workflow job with access to production release credentials.

Eliminate Cache Consumption in Releases

GitHub Actions caches are intended to speed up builds for testing. Using them in production builds reduces integrity because they risk introducing new code into the build that is not reflected in source code.

Safe could update workflows and reusable actions in their repository to disable consumption of the actions cache if the build is a production release.
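One possible shape for that change, assuming the restore step shown earlier lives in a local composite action and that production builds are the ones triggered by a `release` event:

```yaml
- name: Restore Yarn Cache
  # Skip cache restoration entirely when the run was triggered by a release.
  if: ${{ inputs.mode == 'restore-yarn' && github.event_name != 'release' }}
  id: restore
  uses: actions/cache/restore@v4
  with:
    path: |
      **/node_modules
    key: ${{ runner.os }}-web-core-modules-${{ hashFiles('**/package.json','**/yarn.lock') }}
```

Any later step that reads the `cache-hit` output of this step would need to treat a skipped restore as a miss and run a full install.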

References

- /2024/05/06/the-monsters-in-your-build-cache-github-actions-cache-poisoning/

- https://docs.github.com/en/actions/security-for-github-actions/security-hardening-your-deployments/configuring-openid-connect-in-amazon-web-services

- https://docs.github.com/en/actions/managing-workflow-runs-and-deployments/managing-deployments/managing-environments-for-deployment